Unity ML-Agents Challenge I

with Sébastien Harinck

Jan 2018

Introduction

Being very interested in machine learning and Unity, we came across the ML-Agents toolkit a couple of weeks ago. The feature looks awesome, and so does its potential, but how do you use it in a game?

We thought about the good old handyman in one of our favorite game series, RollerCoaster Tycoon. He could mow the grass in your theme park, but he was never very efficient: he only mowed around himself, moving from one tile to the next, and ended up going in circles at the bottom of your park (not a smart AI).

Also, today these kinds of games are mostly 3D and try to avoid the grid pattern as much as possible. So with a more realistic terrain without a grid, how can we bring back this part of the gameplay without an overly complex hard-coded AI? Maybe machine learning could help, so we tried it!

Objective

As a first attempt, we simplified the behavior of our handyman into a little game. You have an area full of grass, and you must cut all of it as quickly as possible by driving the garden robot over the clods of grass.

Machine Learning

We started our quest with the PPO Jupyter notebook.

The inputs

Our mower has 8 sensors around it. Each sensor provides 3 types of measurements:

- the distance to the first clod

- the number of clods along the sensor

- the distance to an obstacle

So our input is just a vector of 24 values (8 sensors × 3 measurements).
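
To picture how such a vector could be laid out, here is a minimal Python sketch. The `SensorReading` type, its field names, and the ordering of the values are hypothetical and only serve as an illustration; in ML-Agents the observations are actually collected on the Unity side.

```python
from dataclasses import dataclass
from typing import List

NUM_SENSORS = 8        # from the text: 8 sensors around the mower
VALUES_PER_SENSOR = 3  # from the text: 3 measurements per sensor


@dataclass
class SensorReading:
    """Hypothetical container for what one sensor reports."""
    first_clod_distance: float  # distance to the first clod along the sensor
    clod_count: int             # number of clods along the sensor
    obstacle_distance: float    # distance to an obstacle along the sensor


def build_observation(readings: List[SensorReading]) -> List[float]:
    """Flatten the per-sensor readings into one flat observation vector."""
    if len(readings) != NUM_SENSORS:
        raise ValueError(f"expected {NUM_SENSORS} readings, got {len(readings)}")
    observation: List[float] = []
    for r in readings:
        observation.extend(
            [r.first_clod_distance, float(r.clod_count), r.obstacle_distance]
        )
    return observation


# Example with made-up values: every sensor reports the same thing.
example = build_observation([SensorReading(2.0, 3, 5.0) for _ in range(NUM_SENSORS)])
print(len(example))  # 24
```

The vector length is simply `NUM_SENSORS * VALUES_PER_SENSOR`, which is where the 24 comes from.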

The rewards and punishments

We tried different rewards and punishments for our mower. The best combination we found for the model is this:

- each clod mowed: +0.1

- all clods mowed: +10

- a rock touched: -1

We will try adding a small punishment for each frame.
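
The reward scheme above can be sketched as a small Python function. This is only an illustration under assumptions: the function name, its parameters, and the idea of computing everything in one place are hypothetical, and the `frame_penalty` argument stands for the per-frame punishment we have not tried yet (it defaults to 0, which matches the current setup).

```python
CLOD_REWARD = 0.1       # each clod mowed
ALL_CLODS_BONUS = 10.0  # all clods mowed
ROCK_PENALTY = -1.0     # a rock touched


def step_reward(clods_mowed: int, clods_remaining: int, touched_rock: bool,
                frame_penalty: float = 0.0) -> float:
    """Reward for a single step of the mower (hypothetical helper).

    frame_penalty is an assumed small negative value (for example -0.001)
    that would be applied on every frame; 0.0 disables it.
    """
    reward = frame_penalty
    reward += CLOD_REWARD * clods_mowed
    # The bonus is granted on the step where the last clod disappears.
    if clods_mowed > 0 and clods_remaining == 0:
        reward += ALL_CLODS_BONUS
    if touched_rock:
        reward += ROCK_PENALTY
    return reward


# Mowing the very last clod without touching a rock:
print(step_reward(clods_mowed=1, clods_remaining=0, touched_rock=False))
```

Keeping the three constants at the top makes it easy to experiment with other values between training runs.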

The decisions

A mower can go forward, backward, right, and left, so the agent needs to return a vector of 2 continuous values. The first value is the speed and the second is the rotation. Examples:

- [-1, 0]: backward

- [0.5, 0.75]: forward and right
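
To make the two examples concrete, here is a small Python sketch that interprets such an action vector. The function names are hypothetical, and two things are assumptions rather than facts from our project: that both values lie in [-1, 1] (hence the clamping), and that a negative rotation means left, by symmetry with the "right" example.

```python
from typing import Sequence


def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Keep a value inside [low, high] (assumed action range)."""
    return max(low, min(high, value))


def describe_action(action: Sequence[float]) -> str:
    """Turn a [speed, rotation] vector into a human-readable description."""
    if len(action) != 2:
        raise ValueError("expected a vector of 2 values: [speed, rotation]")
    speed, rotation = clamp(action[0]), clamp(action[1])
    parts = []
    if speed > 0:
        parts.append("forward")
    elif speed < 0:
        parts.append("backward")
    if rotation > 0:
        parts.append("right")
    elif rotation < 0:
        parts.append("left")  # assumption: negative rotation turns left
    return " and ".join(parts) if parts else "idle"


print(describe_action([-1, 0]))      # backward
print(describe_action([0.5, 0.75]))  # forward and right
```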

Hyper-parameters and model

For the training to succeed, we tuned the hyper-parameters:

- max_steps = 1e7

- num_layers = 4

- buffer_size = 10000

- learning_rate = 1e-6

- hidden_units = 1024

- batch_size = 2000

Increasing the buffer_size and the batch_size was a good idea, taken from this issue: https://github.com/Unity-Technologies/ml-agents/issues/288
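
For reference, the values above can be written down as a plain Python dictionary. The dictionary itself is just an illustrative container (how the values are passed to the notebook is not shown here), and the check that buffer_size is a multiple of batch_size reflects a common recommendation for PPO rather than something we verified ourselves.

```python
# Hyper-parameter values as listed above.
hyperparameters = {
    "max_steps": 1e7,
    "num_layers": 4,
    "buffer_size": 10000,
    "learning_rate": 1e-6,
    "hidden_units": 1024,
    "batch_size": 2000,
}

buffer_size = hyperparameters["buffer_size"]
batch_size = hyperparameters["batch_size"]

# Sanity check (assumed recommendation): the buffer splits into whole batches.
if buffer_size % batch_size != 0:
    raise ValueError("buffer_size should be a multiple of batch_size")

batches_per_buffer = buffer_size // batch_size
print(batches_per_buffer)  # 5
```

With these values, each filled buffer is split into 5 batches of 2000 experiences.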

The training

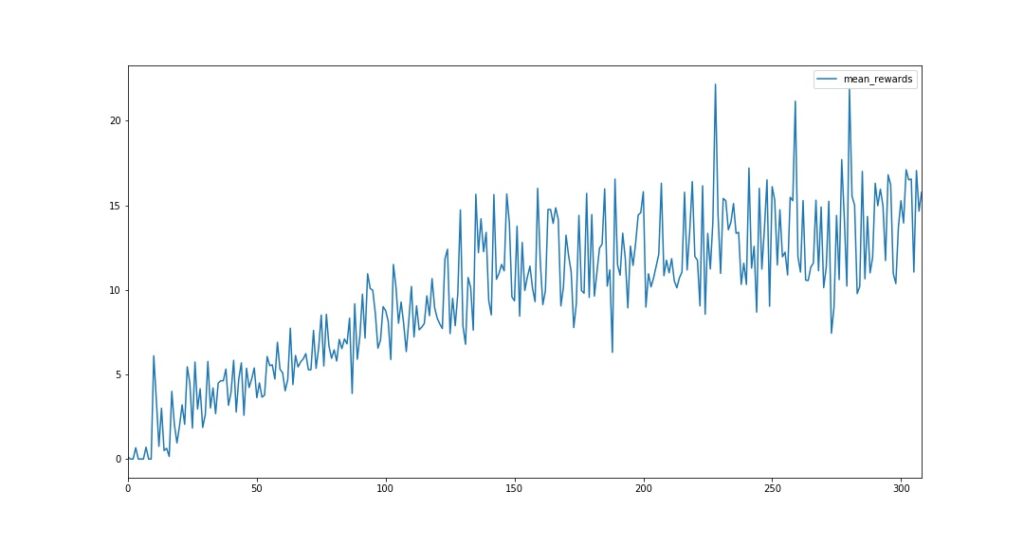

We launch several training. We turned hyper-parameters and even change the environment for it to be more easier for the agent to learn. Since we were not able to do the training on a GPU, it was very long to change something and have the result…

But we trained an agent able to mown clods and avoid rocks ! Here is the mean rewards during the training :

Conclusion

It was a lot of fun for a first attempt! We thank the Unity team who developed this new feature.

Improvements

- try the training with 2 or 3 rocks

- add a small punishment for each frame

- try with a smaller model (fewer layers and hidden units)

- try with a final score only

- try with more agents

- try a custom model (not ppo)

- try with moving obstacles

- fix TensorBoard

- fix the training on a Linux VM with a GPU in the cloud

- try to train the model with pixels